
As a new international organization focusing on the Internet, the World Internet Conference (WIC) is committed to building a global Internet platform for extensive consultation, joint contribution and shared benefits. To advocate the concept of technology for social good and to guide parties concerned around the world in using AI technology to bridge the digital divide and promote human well-being, WIC launched the World Internet Conference AI for Social Good Action Plan and solicited projects globally. The first round of 33 projects, received from 23 enterprises and organizations, covers cultural exchanges, biodiversity conservation, medical health, accessibility transformation, education and training, livable environments and other fields. These projects reflect a development trend in which the close integration of voice recognition, image recognition, natural language processing, situational awareness and other AI technologies with specific social-good scenarios provides more diverse solutions for continually improving social welfare capabilities.

Microsoft uses speech synthesis technology to make audiobooks more convenient. In collaboration with Microsoft, the Hongdandan Cultural Service Center for Visual Impairment uses deep neural network voice synthesis and voice adaptation technology to create more realistic voices for audiobooks, with multiple tones and emotions, so that audio content is no longer read in a single, monotonous voice. Furthermore, Microsoft’s open text-to-speech platform can shorten the production time of an audiobook from three months to hundreds of milliseconds, significantly reducing labor and time costs, breaking down barriers to the production of audio content, and delivering a "warmer" reading experience to visually impaired people.

In collaboration with Google, LLVision’s accessible AR glasses help hearing-impaired people gain equal access to employment opportunities. LLVision has developed accessible AR glasses for hearing-impaired people using Augmented Reality (AR), speech recognition, machine translation and other technologies. By converting speech into text and displaying it in front of the wearer’s eyes, the product helps hearing-impaired individuals overcome the communication difficulties caused by hearing loss, making them more effective in scenarios such as information acquisition, communication, psychological cognition, learning and training, and job interviews. With the support of the Google Overseas Entrepreneurship Accelerator Program, LLVision applied Google Cloud’s global solutions to products aimed at overseas users, significantly reducing background noise, optimizing speech processing and achieving smoother speech transcription and translation.

Starbucks uses AI technology to assist hearing-impaired baristas working in its sign language stores. Starbucks and Microsoft jointly developed the Angel Partner Intelligent Assistant System, which is built on a variety of Microsoft cognitive service technologies, such as deep neural network speech synthesis, natural language processing and AI self-learning, as well as Microsoft Intelligent Cloud and IoT technology. Starbucks uses the system to help hearing-impaired baristas communicate with customers, providing an accessible working environment for baristas with hearing impairments and an accessible ordering experience for consumers, thus creating inclusive interpersonal communication scenarios for hearing-impaired individuals and their communities.

A digital human developed by Alibaba DAMO Academy recognizes and translates sign language. By combining computer vision, machine translation, voice technology, 3D virtual humans and other technologies, the digital human uses a leading pure-vision recognition algorithm to capture the spatio-temporal information of the sign language movements made by hearing-impaired people in real-world scenes. Through the sign language digital human "Xiao Mo", it achieves two-way translation between natural language and sign language. Xiao Mo’s sign language translation enables hearing-impaired people not only to communicate and make inquiries more easily in sign language, but also to better "see" sign language versions of broadcast notifications in public places such as airports and railway stations, read online news, watch news on video platforms in sync, and watch sign language videos at tourist attractions to learn more about history and culture.

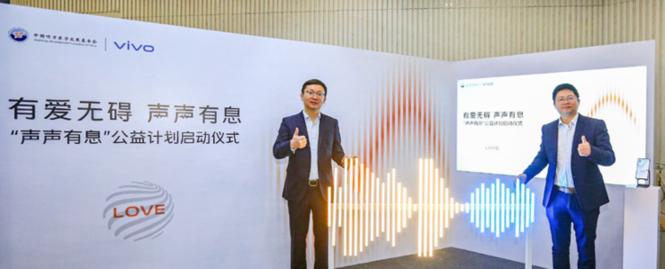

Vivo enables accessible voice and video calls and ambient sound perception. Vivo uses voice recognition and speech synthesis technology in its "accessible call function", which converts speech to text during phone calls and in video calls on social platforms, helping hearing-impaired users have smooth phone or video conversations with family and friends. "Vivo Listening and Speaking" helps hearing-impaired users communicate smoothly face to face, understanding others and making themselves clearly understood; with optimizations for polyphonic characters, rhythm and emotional tone, it also enables them to better express their emotions. Thanks to speech enhancement and speech recognition technology developed by vivo, the speech recognition rate exceeds 90%. The "sound recognition" function uses ambient sound perception technology, together with mobile phones or other peripheral devices, to convert sounds such as a baby crying, a smoke alarm or a car horn into perceptible visual and tactile signals, helping hearing-impaired people identify important and safety-related sounds in their daily lives.

Tencent’s algorithm empowers hearing aid manufacturers to solve the problem of "unclear hearing". Although people with hearing disabilities can regain hearing through hearing aids, they still find it hard to hear clearly in noisy environments. Based on psychoacoustic models of speech production and hearing, Tencent integrates perceptual coding, classic voice signal processing and deep learning technology into its audio processing and encoding/decoding systems to provide hearing-impaired people with a high-definition, clean and smooth audio communication experience. This technology has been opened to developers, manufacturers and partners in the field of social responsibility for scenarios such as cochlear implant and hearing aid noise reduction, AI hearing aids and caption recognition optimization, to jointly improve the noise reduction of hearing aid devices and the experience of their wearers.

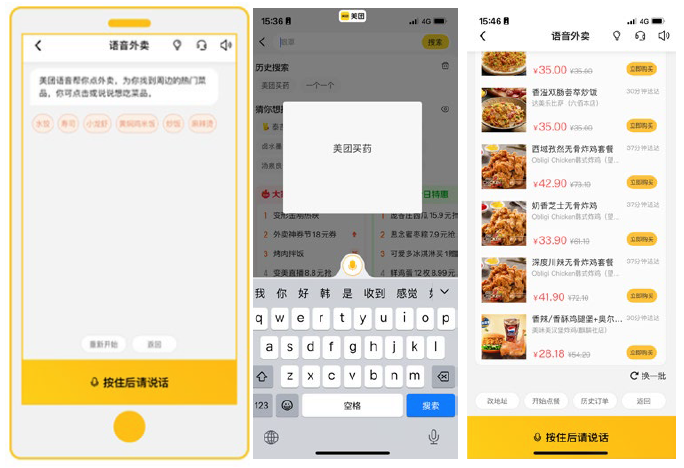

Meituan uses voice recognition and processing technology to make its services more elderly-friendly. Meituan converts complex operations such as typing, product selection, ordering and payment into voice commands for elderly users. They only need to say "place an order" or similar instructions to consult a pharmacist online and purchase medicine, helping the elderly enjoy the convenience brought by intelligent services.

(Editors: Mu Ziye, Kong Fanxin)